Choosing the Right Generative AI Model for Your Next Project

10 Oct 25

The $200,000 mistake is more common than you think.

Companies across industries are investing heavily in generative AI, but here’s what most won’t admit: they can’t tell the difference between actual innovation and repackaged technology. They’re choosing models based on marketing promises, not technical fit. And it’s costing them millions in wasted resources and lost opportunities.

The stakes have never been higher.

The generative AI market is racing toward $283.37 billion by 2034. Over 80% of enterprises plan to deploy GenAI solutions by 2026. In this rapid transformation, the companies that choose correctly will dominate their industries. Those that choose poorly? They’ll spend the next year explaining why their “revolutionary AI” performs worse than their competitors’.

But here’s the problem nobody’s talking about:

Every vendor claims their model is “cutting-edge.” Every solution promises “enterprise-grade performance.” Every demo looks impressive. So how do you actually know which model is right for your specific project?

This guide will help you navigate the complex world of generative AI models, understand their strengths and limitations, and make informed decisions that align with your project goals.

What Are Generative AI Models?

Before diving into selection criteria, let’s clarify what we’re actually choosing. A generative AI model is the computational engine, the mathematical architecture based on neural networks, that powers applications like ChatGPT, DALL-E, and Midjourney. It’s not the user interface you interact with, but the sophisticated brain working behind the scenes.

These models are trained on massive datasets to learn probability distributions, enabling them to understand context and generate new, original content across various formats: text, images, code, audio, and video.
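As a deliberately tiny illustration of that learn-then-generate pattern, the Python sketch below estimates a single normal distribution from a few example values and then samples new values that never appeared in the data. The sample data and the one-Gaussian "model" are assumptions for illustration. Real generative models use neural networks to learn far richer distributions over words, pixels, or audio.

```python
from statistics import NormalDist

# Hypothetical training data: a handful of observed values (e.g. heights in cm).
training_data = [170.2, 165.5, 180.1, 175.3, 168.8, 172.4, 177.9, 169.6]

def fit(data: list[float]) -> NormalDist:
    """'Training': estimate a probability distribution (here a single Gaussian) from data."""
    return NormalDist.from_samples(data)

def generate(model: NormalDist, n: int, seed: int = 0) -> list[float]:
    """'Generation': sample new values from the learned distribution."""
    return model.samples(n, seed=seed)

model = fit(training_data)
print(f"learned mean={model.mean:.1f}, stdev={model.stdev:.1f}")
print([round(v, 1) for v in generate(model, 5)])
```

The two phases, fitting a distribution and then sampling from it, are the same ones the model types below carry out at much larger scale.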

The Three Pillars: Core Generative AI Model Types

1. Generative Adversarial Networks (GANs)

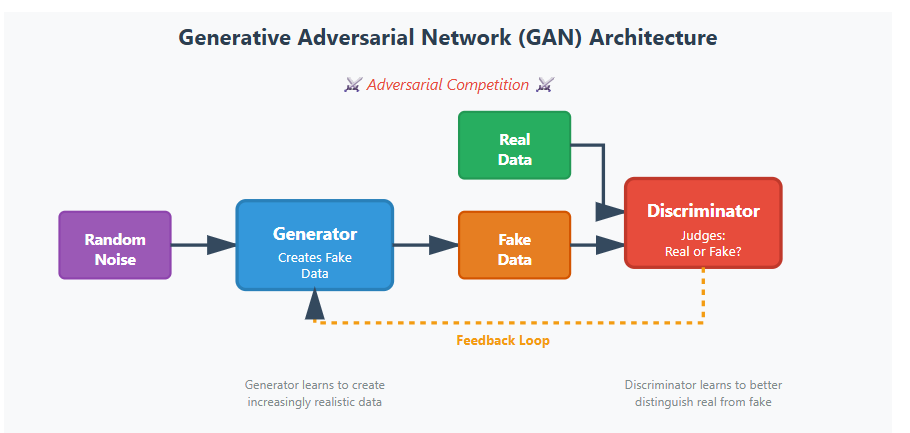

How They Work: GANs operate through an adversarial process involving two neural networks, a Generator and a Discriminator, locked in continuous competition. The Generator creates synthetic data attempting to fool the Discriminator, while the Discriminator evaluates whether data is real or fake. Through repeated cycles, the Generator becomes increasingly skilled at producing realistic outputs.
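The adversarial loop can be sketched in plain Python with a deliberately tiny setup, all of it assumed for illustration: "real" data are samples from a standard normal distribution, the generator only learns a shift `b` (G(z) = z + b), and the discriminator is a logistic regression D(x) = sigmoid(w·x + c). The function name `train_toy_gan` is hypothetical. The two players take turns: the discriminator steps toward telling real from fake, then the generator steps toward fooling it.

```python
import math
import random

def sigmoid(u: float) -> float:
    """Logistic function, written to avoid overflow for large |u|."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)

def train_toy_gan(steps: int = 3000, batch: int = 64, lr: float = 0.1, seed: int = 0) -> float:
    """Alternate discriminator and generator updates; return the generator's learned shift."""
    rng = random.Random(seed)
    b = 3.0          # generator G(z) = z + b starts far from the real data (mean 0)
    w, c = 0.0, 0.0  # discriminator D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        # Discriminator step: gradient ascent on mean log D(real) + mean log(1 - D(fake)).
        gw = gc = 0.0
        for _ in range(batch):
            x_real = rng.gauss(0.0, 1.0)
            x_fake = rng.gauss(0.0, 1.0) + b
            g_real = 1.0 - sigmoid(w * x_real + c)  # d/du of log sigmoid(u)
            g_fake = -sigmoid(w * x_fake + c)       # d/du of log(1 - sigmoid(u))
            gw += g_real * x_real + g_fake * x_fake
            gc += g_real + g_fake
        w += lr * gw / batch
        c += lr * gc / batch

        # Generator step (non-saturating loss): gradient ascent on mean log D(G(z)).
        gb = 0.0
        for _ in range(batch):
            x_fake = rng.gauss(0.0, 1.0) + b
            gb += (1.0 - sigmoid(w * x_fake + c)) * w  # chain rule, since dG/db = 1
        b += lr * gb / batch
    return b

learned_shift = train_toy_gan()
print(f"generator mean: started at 3.0, ended at {learned_shift:.2f} (real data mean: 0.0)")
```

Production GANs such as StyleGAN replace both players with deep convolutional networks trained by automatic differentiation, but the alternating update pattern is the same.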

Best Use Cases:

- Photorealistic image generation

- Image editing and enhancement

- Synthetic data creation for training datasets

- Medical imaging generation

- Style transfer and artistic applications

Strengths:

- Exceptional at creating highly realistic images

- Effective even with limited training data

- Fast generation once training is complete

- Excellent for creating variations of existing content

Limitations:

- Notoriously difficult and unstable to train

- Prone to “mode collapse” where diversity decreases

- Requires careful hyperparameter tuning

- Limited control over specific attributes in generated content

Real-World Applications:

- NVIDIA StyleGAN for photorealistic face generation

- This Person Does Not Exist demonstrations

- Adobe’s content-aware fill in Photoshop

- Super-resolution applications in photo editing

When to Choose GANs: Select GANs when your project demands high-fidelity visual outputs, particularly for image generation, enhancement, or synthetic data creation where realism is paramount.

2. Diffusion Models

Diffusion models learn to reverse a noise-adding process. During training, noise is gradually added to images until only random noise remains (the forward process), and the model learns to remove this noise step by step (the reverse process). Many diffusion models, including the original Stable Diffusion releases, use a U-Net neural network as the denoiser. Guided by learned patterns and text prompts, they can transform random noise back into realistic data samples.
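The forward and reverse processes can be sketched in plain Python, with a single number standing in for an image. Assumptions: the linear noise schedule (beta from 1e-4 to 0.02 over 1,000 steps) follows the original DDPM recipe, and `oracle_noise_predictor` is a hypothetical stand-in that cheats by knowing the clean value, in the place where a real system runs a trained U-Net or similar network. The point is the mechanics: the forward process can jump to any noise level in closed form, and the reverse loop removes predicted noise one step at a time.

```python
import math
import random

# Linear noise schedule from the original DDPM setup: beta rises from 1e-4 to 0.02 over T steps.
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]
alphas = [1.0 - beta for beta in betas]
alpha_bars = []  # cumulative products: how much of the original signal survives
running = 1.0
for a in alphas:
    running *= a
    alpha_bars.append(running)

def add_noise(x0: float, t: int, rng: random.Random) -> tuple[float, float]:
    """Forward process in closed form: noise a clean value x0 straight to step t."""
    eps = rng.gauss(0.0, 1.0)
    xt = math.sqrt(alpha_bars[t]) * x0 + math.sqrt(1.0 - alpha_bars[t]) * eps
    return xt, eps  # a network is trained to predict eps from (xt, t)

def oracle_noise_predictor(xt: float, t: int, x0: float) -> float:
    """Hypothetical stand-in for the trained denoiser (e.g. a U-Net). It cheats by
    knowing x0; a real model has to learn this prediction from data."""
    return (xt - math.sqrt(alpha_bars[t]) * x0) / math.sqrt(1.0 - alpha_bars[t])

def sample(x0_target: float, seed: int = 0) -> float:
    """Reverse process: start from pure noise and remove predicted noise step by step."""
    rng = random.Random(seed)
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(T)):
        eps_hat = oracle_noise_predictor(x, t, x0_target)
        mean = (x - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps_hat) / math.sqrt(alphas[t])
        noise = rng.gauss(0.0, 1.0) if t > 0 else 0.0  # no fresh noise on the final step
        x = mean + math.sqrt(betas[t]) * noise
    return x

print(f"signal left after {T} forward steps: {math.sqrt(alpha_bars[-1]):.4f}")
print(f"reverse process output: {sample(2.5):.4f}")
```

Training amounts to teaching the network to output the `eps` returned by `add_noise`. The thousand-iteration loop in `sample` also illustrates why generation is slower than a GAN's single forward pass.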

Best Use Cases:

- Text-to-image generation

- Advanced image editing

- Medical imaging (denoising, training data generation)

- Video and 3D object generation

- Art creation with complex, detailed prompts

Strengths:

- Superior image quality compared to GANs

- Stable, reliable training process

- Exceptional text-to-image capabilities

- Fine-grained control over generated content

- Handles complex, detailed prompts effectively

Limitations:

- Slower generation due to multiple denoising steps

- Higher computational requirements

- Large model sizes requiring significant resources

- Can struggle with precise text rendering within images

Real-World Applications:

- DALL-E 2/3 by OpenAI

- Stable Diffusion (open-source platform)

- Midjourney for AI art creation

- Adobe Firefly in Creative Cloud

- Canva AI design tools

- Google’s Imagen

When to Choose Diffusion Models: Opt for diffusion models when you need superior image quality, excellent text-to-image conversion, or require fine control over creative outputs. They’re ideal for marketing visuals, creative design, and any application where prompt-based control matters.

3. Transformer-Based Models

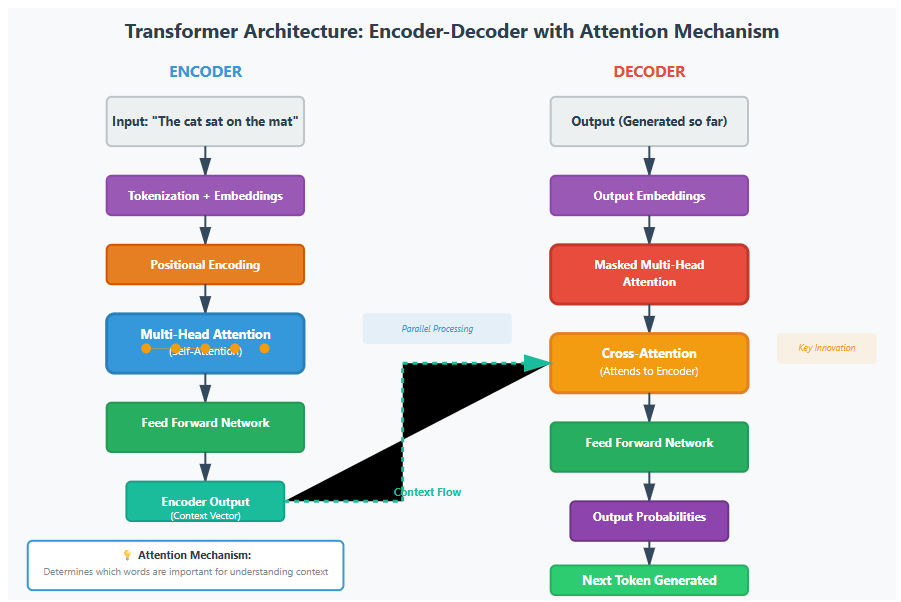

Transformers revolutionized AI through their “attention mechanism,” which determines relationships and relevance between different parts of input data. They process sequential data (particularly text) by understanding context and meaning, transforming input sequences into output sequences. Key components include multi-head attention, positional encoding, and feed-forward networks. The original Transformer arranged these in an encoder-decoder structure, while GPT-style language models use only the decoder stack.
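The core computation, scaled dot-product attention as defined in the original Transformer paper, fits in a few lines of plain Python. As a simplifying assumption, the example feeds the same token vectors in as queries, keys, and values; real models first multiply them by learned projection matrices and run several such heads in parallel (multi-head attention).

```python
import math

Vector = list[float]

def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q: list[Vector], K: list[Vector], V: list[Vector]) -> tuple[list[Vector], list[Vector]]:
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, one query row at a time."""
    d = len(K[0])
    outputs, weights = [], []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K]
        w = softmax(scores)  # relevance of every token to this query
        weights.append(w)
        # Output = relevance-weighted mix of the value vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, V)) for i in range(len(V[0]))])
    return outputs, weights

# Three toy token embeddings (assumed); real models derive Q, K, V with learned projections.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(X, X, X)
for row in weights:
    print([round(p, 3) for p in row])
```

Each printed row sums to 1 and shows how strongly one token attends to every token in the sequence. Because every row is computed independently, the whole sequence can be processed in parallel.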

Best Use Cases:

- Natural language generation (articles, reports, code)

- Conversational AI and chatbots

- Machine translation

- Document summarization

- Code completion and generation

- Enterprise knowledge management

- Multimodal AI (text-to-image, text-to-video, text-to-audio)

Strengths:

- Exceptional language understanding and generation

- Handles long-form content effectively

- Highly versatile across multiple domains

- Can be fine-tuned for specific use cases

- Parallel processing of entire sequences

Limitations:

- Massive computational requirements

- Expensive to train and operate

- Can generate plausible but incorrect information (hallucinations)

- Limited by training data cutoff dates

- Context window limitations for very long documents

Real-World Applications:

- GPT-4 and ChatGPT by OpenAI

- Gemini by Google

- GitHub Copilot for code assistance

- Grammarly for writing enhancement

When to Choose Transformer-Based Models: Select transformers when your project involves natural language processing, conversational interfaces, content generation, or code assistance. They’re the backbone of modern LLMs and excel at understanding context and generating coherent, contextually relevant outputs.

Beyond the Big Three: Other Model Types Worth Knowing

Variational Autoencoders (VAEs)

VAEs encode data into a compact latent space, then reconstruct it with variations to create new, modified outputs. They’re useful for image reconstruction, drug discovery, and applications requiring controlled data generation.
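Two pieces make a VAE trainable and are easy to show in isolation: the reparameterization trick, which samples a latent vector as mean plus scaled noise, and the KL-divergence penalty that keeps the encoder's distributions close to a standard normal. The Python sketch below assumes a 2-dimensional latent space, made-up encoder outputs, and a hypothetical linear `decode` function in place of trained networks.

```python
import math
import random

def reparameterize(mu: list[float], log_var: list[float], rng: random.Random) -> list[float]:
    """Sample z = mu + sigma * eps with eps ~ N(0, 1). Keeping the randomness in eps
    lets training backpropagate through mu and log_var."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu: list[float], log_var: list[float]) -> float:
    """KL divergence between N(mu, sigma^2) and N(0, 1), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

def decode(z: list[float]) -> list[float]:
    """Hypothetical decoder: a fixed linear map standing in for a trained network."""
    return [2.0 * z[0] + 0.5 * z[1], -z[0] + 1.5 * z[1]]

# Assumed encoder output for one input: mean and log-variance of a 2-D latent code.
mu, log_var = [0.8, -0.3], [-1.0, -2.0]
rng = random.Random(0)
variations = [decode(reparameterize(mu, log_var, rng)) for _ in range(3)]
print("variations:", [[round(v, 2) for v in out] for out in variations])
print("KL penalty:", round(kl_to_standard_normal(mu, log_var), 3))
```

During training, the KL penalty is added to a reconstruction error; the sketch shows only the building blocks, not the training loop. The three decoded outputs are the "variations" of a single input.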

Autoregressive Models

These models generate content one element at a time, predicting each next element from the ones before it (like predicting the next word based on previous words). GPT-style, decoder-only transformers are autoregressive, which makes this approach the foundation of modern large language models and of some image generators.
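The next-token loop at the heart of autoregressive generation can be shown with a toy bigram model in Python. The corpus and the bigram "model" are illustrative assumptions: a real LLM replaces the count table with a neural network that scores every possible next token given the entire preceding context, but the generation loop (predict, sample, append, repeat) has the same shape.

```python
import random
from collections import Counter, defaultdict

corpus = "the model reads the prompt and the model writes the answer".split()

# "Training": count which word follows which (a bigram model).
next_counts: dict[str, Counter] = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    next_counts[prev][nxt] += 1

def generate(start: str, max_words: int = 8, seed: int = 0) -> list[str]:
    """Autoregressive loop: each new word is sampled conditioned on the output so far
    (here only the previous word; a transformer conditions on the whole context)."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(max_words - 1):
        options = next_counts.get(words[-1])
        if not options:  # no known continuation: stop early
            break
        choices, weights = zip(*options.items())
        words.append(rng.choices(choices, weights=weights, k=1)[0])
    return words

print(" ".join(generate("the")))
```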

Flow-Based Models

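Flow-based models use invertible (reversible) mathematical transformations to convert a simple distribution, such as Gaussian noise, into complex outputs. Because every step can be run backward, they offer precise control and can compute the exact likelihood of a data point, but they require significant computational resources, making them suitable for scientific simulations and density estimation.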
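Because each transformation is invertible, a flow can compute the exact probability density of any data point through the change-of-variables formula. The Python sketch below shows this with a single one-dimensional affine layer whose parameters are hand-picked assumptions; practical flows stack many invertible layers with learned, input-dependent parameters.

```python
import math
from statistics import NormalDist

BASE = NormalDist(0.0, 1.0)  # the simple starting distribution

class AffineFlow:
    """One invertible layer, x = scale * z + shift, with hand-picked (assumed) parameters."""

    def __init__(self, scale: float, shift: float) -> None:
        if scale == 0:
            raise ValueError("scale must be non-zero, or the map is not invertible")
        self.scale = scale
        self.shift = shift

    def forward(self, z: float) -> float:
        """Sampling direction: simple noise in, data-like value out."""
        return self.scale * z + self.shift

    def inverse(self, x: float) -> float:
        """Density direction: map a data point back to the base space."""
        return (x - self.shift) / self.scale

    def log_prob(self, x: float) -> float:
        """Change of variables: log p(x) = log p_base(z) + log|dz/dx|, where dz/dx = 1/scale."""
        z = self.inverse(x)
        return math.log(BASE.pdf(z)) - math.log(abs(self.scale))

flow = AffineFlow(scale=2.0, shift=1.0)
samples = [flow.forward(z) for z in BASE.samples(3, seed=0)]
print("samples:", [round(s, 3) for s in samples])
print("exact log-density at x=0.3:", round(flow.log_prob(0.3), 4))
```

For this single layer the result matches the log-density of a normal distribution with mean 1 and standard deviation 2, which is an easy way to sanity-check the bookkeeping.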

The Decision Framework: How to Choose the Right Model

Step 1: Define Your Use Case and Requirements

Clarify Your Primary Objective:

- Content creation (text, images, video)?

- Coding assistance?

- Design and creative work?

- Customer support automation?

- Data augmentation or synthetic data generation?

Determine Output Type:

- Text only

- Images only

- Code

- Audio

- Multimodal (combination of formats)

Set Performance Goals:

- High accuracy and quality

- Real-time or low latency

- Consistency across generations

- Detailed, nuanced outputs
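One way to keep the answers to these questions explicit is to record them as a small structured spec and derive a first shortlist from it. The sketch below is a hypothetical helper (`Requirements`, `OutputType`, and `candidate_families` are illustrative names, not from any library), and its mapping simply encodes the model descriptions earlier in this guide. Treat its output as a starting point for the remaining steps, not a decision.

```python
from dataclasses import dataclass
from enum import Enum

class OutputType(Enum):
    TEXT = "text"
    IMAGES = "images"
    CODE = "code"
    AUDIO = "audio"
    MULTIMODAL = "multimodal"

@dataclass
class Requirements:
    objective: str             # e.g. "customer support automation"
    output: OutputType
    low_latency: bool = False  # real-time or near-real-time generation needed?

def candidate_families(req: Requirements) -> list[str]:
    """First-pass shortlist encoding this guide's model descriptions (a heuristic, not a rule)."""
    if req.output is OutputType.IMAGES:
        # Diffusion leads on quality and prompt control; GANs generate faster once trained.
        return ["gan", "diffusion"] if req.low_latency else ["diffusion", "gan"]
    # Text, code, audio, and multimodal outputs: transformer-based models, per the transformer section.
    return ["transformer"]

print(candidate_families(Requirements("ad creatives", OutputType.IMAGES)))
print(candidate_families(Requirements("support chatbot", OutputType.TEXT, low_latency=True)))
```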

Step 2: Evaluate Business Metrics

Cost Considerations:

- Cost per generation vs. manual creation

- Infrastructure and computational costs

- Licensing fees (proprietary vs. open-source)

- Ongoing operational expenses

Time and Scalability:

- Time savings compared to current processes

- Volume scalability requirements

- Speed of deployment

- Training and fine-tuning timeline
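Cost per generation is simple arithmetic once you estimate volumes. The sketch below compares a pay-per-token API with manual creation and with a fixed monthly self-hosting budget. Every price and volume in it is a hypothetical placeholder, so substitute your vendor's published rates and your own infrastructure quotes.

```python
def api_cost_per_request(in_tokens: int, out_tokens: int,
                         price_in_per_m: float, price_out_per_m: float) -> float:
    """Pay-per-use cost of one generation, with prices quoted per million tokens."""
    return (in_tokens * price_in_per_m + out_tokens * price_out_per_m) / 1_000_000

def break_even_volume(fixed_monthly_cost: float, cost_per_request: float) -> float:
    """Monthly request volume above which a fixed-cost setup (e.g. self-hosting) is cheaper."""
    return fixed_monthly_cost / cost_per_request

# Hypothetical inputs: replace with your vendor's rates and your own volumes.
requests_per_month = 100_000
per_request = api_cost_per_request(in_tokens=500, out_tokens=300,
                                   price_in_per_m=3.00, price_out_per_m=15.00)
manual_cost_per_item = 2.50     # assumed cost of producing the same output by hand
self_hosting_monthly = 4_000.0  # assumed GPUs + hosting, excluding staff time

print(f"API: ${per_request:.4f} per generation, ${per_request * requests_per_month:,.0f}/month")
print(f"Manual equivalent: ${manual_cost_per_item * requests_per_month:,.0f}/month")
print(f"Self-hosting breaks even above {break_even_volume(self_hosting_monthly, per_request):,.0f} requests/month")
```

The sketch leaves out ongoing operational expenses such as monitoring, staff time, and fine-tuning runs, which belong in any real total-cost comparison.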

Step 3: Assess Technical Requirements

Model Characteristics:

- Model size vs. your infrastructure capabilities

- Performance and accuracy benchmarks

- Latency requirements

- Integration complexity with existing systems
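For model size versus your infrastructure, a first-order check is arithmetic: the weights alone need roughly parameter count × bytes per parameter. The sketch assumes common precisions (4 bytes for fp32, 2 for fp16/bf16, 1 for int8, 0.5 for 4-bit quantization), 1 GB = 10^9 bytes, and a hypothetical 20% overhead factor for activations and caches. Actual requirements depend on the serving stack, batch size, and context length, so verify on your target hardware.

```python
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory for the model weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

def fits_on_gpu(num_params: float, precision: str, gpu_memory_gb: float,
                overhead: float = 1.2) -> bool:
    """Rough check; `overhead` is an assumed multiplier for activations, KV cache, and buffers."""
    return weight_memory_gb(num_params, precision) * overhead <= gpu_memory_gb

print(f"7B model in fp16: {weight_memory_gb(7e9):.1f} GB of weights")
print("70B fp16 on an 80 GB GPU:", fits_on_gpu(70e9, "fp16", 80.0))
print("70B int4 on an 80 GB GPU:", fits_on_gpu(70e9, "int4", 80.0))
```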

Data and Privacy:

- Data privacy and security requirements

- Industry compliance (GDPR, HIPAA, SOX, PCI-DSS)

- Data residency requirements

- Training data sensitivity

Step 4: Open-Source vs. Proprietary Decision

Open-Source Advantages:

- Full control and customization

- No ongoing license costs

- Community support and transparency

- Flexibility in deployment

Proprietary Advantages:

- Robust vendor support

- Easier integration

- Faster time-to-market

- Regular updates and improvements

- Enterprise-grade security

Step 5: Customization and Fine-Tuning Needs

Determine whether you need:

- Out-of-the-box performance

- Domain-specific fine-tuning

- Custom training on proprietary data

- Ongoing model updates and improvements

Step 6: Testing and Validation

Conduct Practical Tests:

- Test with actual data and prompts

- Evaluate relevance and accuracy

- Assess consistency across multiple runs

- Compare outputs from different models

- Measure generation speed and quality

Establish Success Metrics:

- Define clear KPIs aligned with business goals

- Set baseline performance standards

- Create evaluation frameworks

- Plan for regular assessment cycles
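The practical tests above can be wired into a small, repeatable harness. In this sketch each candidate model is any function that takes a prompt and returns text, so a vendor SDK or a local model can be wrapped behind it. `stable_model`, `flaky_model`, and the `non_empty_score` metric are hypothetical placeholders for your real candidates and your own KPIs.

```python
import random
import statistics
import time
from collections import Counter
from typing import Callable

Model = Callable[[str], str]

def non_empty_score(prompt: str, output: str) -> float:
    """Placeholder quality metric; swap in your own KPI (accuracy, rubric score, ...)."""
    return 1.0 if output.strip() else 0.0

def evaluate(model: Model, prompts: list[str], runs: int = 5,
             score: Callable[[str, str], float] = non_empty_score) -> dict[str, float]:
    latencies, scores, agreement = [], [], []
    for prompt in prompts:
        outputs = []
        for _ in range(runs):
            start = time.perf_counter()
            output = model(prompt)
            latencies.append(time.perf_counter() - start)
            outputs.append(output)
            scores.append(score(prompt, output))
        # Consistency: share of runs that match this prompt's most common output.
        agreement.append(Counter(outputs).most_common(1)[0][1] / runs)
    return {
        "mean_score": statistics.fmean(scores),
        "consistency": statistics.fmean(agreement),
        "median_latency_s": statistics.median(latencies),
    }

# Hypothetical candidates; in practice each would wrap a vendor SDK or a local model.
def stable_model(prompt: str) -> str:
    return f"Summary: {prompt[:30]}"

_rng = random.Random(0)
def flaky_model(prompt: str) -> str:
    return _rng.choice([f"Summary: {prompt[:30]}", "", "Not sure."])

prompts = ["Summarize our refund policy.", "Draft a reply to a late-delivery complaint."]
results = {name: evaluate(fn, prompts) for name, fn in
           [("stable", stable_model), ("flaky", flaky_model)]}
for name, metrics in results.items():
    print(name, {k: round(v, 4) for k, v in metrics.items()})
```

Running the same prompt set against every candidate and saving the results also gives you the baseline that later assessment cycles can be compared against.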

Quick Selection Guide: Matching Use Cases to Models

| Use Case | Recommended Model | Why This Choice |
| --- | --- | --- |
| Marketing visuals, product images, ad creatives | Diffusion Models (Stable Diffusion, DALL-E, Midjourney) | Superior image quality, excellent prompt control, creative flexibility |
| Customer support chatbots, Q&A systems | Transformer-Based Models (GPT-4, Claude, Gemini) | Natural language understanding, context retention, conversational ability |
| Code assistance and generation | Transformer-Based Models (GitHub Copilot, CodeLlama) | Understanding code context, multi-language support, completion accuracy |
| Photorealistic synthetic data | GANs (StyleGAN, BigGAN) | High-fidelity outputs, efficient generation, realistic variations |
| Medical imaging and healthcare | GANs + Diffusion Models | Privacy-safe synthetic data, high accuracy, domain adaptability |
| Content writing, articles, reports | Transformer-Based Models (GPT-4, Claude) | Long-form coherence, contextual understanding, style adaptation |
| Visual effects and creative art | GANs + Diffusion Models | Ultra-realistic visuals, artistic flexibility, style control |
| Enterprise knowledge management | Transformer-Based Models (LLaMA, domain-specific LLMs) | Handling large text datasets, contextual accuracy, summarization |

Common Pitfalls to Avoid

1. Chasing the Latest Trend

Don’t choose a model simply because it’s the newest or most talked about. Match the technology to your specific needs, not market hype.

2. Underestimating Computational Costs

Advanced models can be expensive to run. Factor in infrastructure, energy consumption, and scaling costs from day one.

3. Ignoring Fine-Tuning Requirements

Out-of-the-box models rarely meet specialized business needs. Budget time and resources for customization.

4. Overlooking Data Quality

Models are only as good as their training data. Poor-quality inputs lead to poor-quality outputs, regardless of the model’s sophistication.

5. Neglecting Ethical and Compliance Considerations

Ensure your chosen model aligns with ethical AI principles and regulatory requirements in your industry and region.

6. Skipping the Pilot Phase

Always start with low-risk, high-value use cases. Prove the concept before scaling across the organization.

Conclusion: Making the Smart Choice

Choosing the right generative AI model isn’t about finding the “best” model; it’s about finding the best model for your specific project. Start by clearly defining your use case, understanding your constraints, and matching capabilities to requirements. We at Mindster can help you with this.

Remember these key principles:

- Prioritize business outcomes over technological novelty

- Test thoroughly before committing

- Plan for customization and fine-tuning

- Consider total cost of ownership, not just upfront costs

- Build in flexibility for future model evolution

- Establish robust governance and monitoring processes

The generative AI revolution is here, and it’s transforming how we work, create, and innovate. By making informed, strategic choices about model selection, you position your project, and your organization, for success in this exciting new landscape.

The right model isn’t the most powerful or the most popular. It’s the one that reliably delivers value for your specific use case while fitting within your technical, budgetary, and operational constraints. Take the time to choose wisely, and you’ll build a foundation for long-term AI success.
